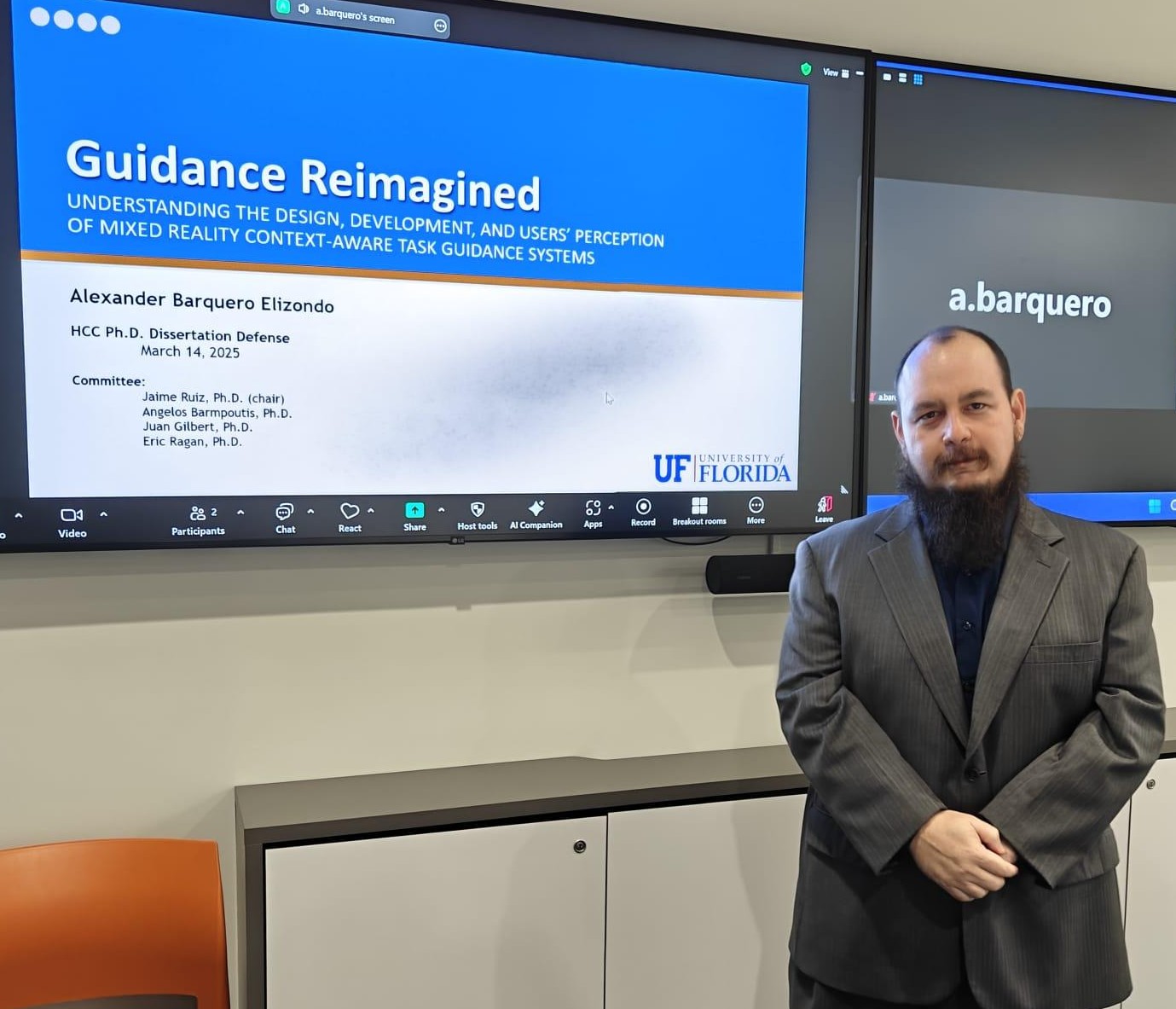

We are thrilled to announce that Alex Barquero has successfully defended his doctoral dissertation, titled:

“Guidance Reimagined: Understanding the Design, Development, and Users’ Perception of Mixed Reality Context-Aware Task Guidance Systems.”

This milestone marks the culmination of years of dedicated research into how people can benefit from Mixed Reality (MR) technology in their daily tasks, and specifically how tailored guidance solutions can reduce completion time, lower error rates, and ease cognitive workload.

In his dissertation, Alex traces the evolution of task guidance systems, from simple paper-based methods to complex, automated systems that use wearable devices, sensors, and artificial intelligence. With Mixed Reality, the physical and digital realms merge to create immersive, context-aware experiences that go beyond traditional on-screen instructions. Through in-depth studies in domains such as cooking, he uncovered the nuances of user interactions and the importance of tailored design in contexts where hands-free or gesture-based interaction is required.

Key findings from his research include:

- Identifying User Needs: By studying real user interactions and pain points, Alex established foundational requirements that can inform the creation of MR task guidance systems across domains: not only in the kitchen, but also in settings such as industrial training, healthcare, and education.

- Designing Modular and Customizable Systems: He demonstrated how modular MR platforms can adapt to different tasks, allowing new guidance modalities and user interface elements to be integrated seamlessly.

- Exploring Gesture-Based Interactions: A gesture elicitation study provided critical insights into how people naturally interact with Mixed Reality while performing tasks, especially when their hands are occupied, and into how best to design user-friendly gestures.

- Assessing Guidance Modalities and Language Factors: Through empirical testing, Alex evaluated how different guidance methods impact user efficiency, workload, and engagement. He also explored how a user’s primary language influences these results, underscoring the importance of inclusive, multilingual design practices.

Alex’s dissertation highlights the potential of human-centered Mixed Reality solutions to transform how we perform everyday tasks, whether assembling furniture, cooking a meal, or carrying out complex industrial procedures. By focusing on user needs, multimodal interface design, and language inclusivity, he has paved the way for more intuitive and accessible MR-based guidance systems.